Intelligent Data Provisioning: From Data Swamps to AI-Ready Assets for UK Enterprises (8-Part Series)

UK enterprises are sitting on vast data estates, yet most of that data never becomes usable value—trapped in ungoverned “data swamps” that are too slow to access, too hard to trust, and too risky to share. As AI systems become continuous consumers of data rather than one-off projects, the old playbook of batch pipelines, ticket-driven access, and siloed governance no longer works.

What’s needed is a shift to modern data provisioning: delivering the right data to the right user for the right purpose, with controls and evidence baked in from the start. This paper outlines a practical path, from assessing data health and building semantic layers to enabling zero-trust collaboration and generating the audit-ready evidence that regulators increasingly expect. For organisations ready to move from “we think we’re compliant” to “we can show the evidence,” the journey starts with treating data not as a storage problem, but as a provisionable asset.

Part 1) The data crunch UK organisations feel every day

Across UK financial services, healthcare, and large enterprises, the data problem is rarely “we don’t have enough data.” It’s that the data estate has become too hard to trust, too slow to access, and too risky to share.

Many organisations built data lakes in order to move quickly—ingest first, structure later. Over time, “later” never arrives. What you get is the well-known slide from data lake → data swamp, where data becomes disorganised and governance is missing, making it difficult to find what you need and use it safely.

And the opportunity cost is enormous. In a large global study by IDC (sponsored by Seagate), only 32% of the data available to enterprises was “put to work.” That means most data value is stranded, sitting in storage whilst AI and analytics teams spend time hunting, cleaning, and re-explaining the same assets.

Why traditional approaches keep failing

- ETL-first thinking doesn’t scale to AI demand. Batch pipelines and rigid transformations were built for predictable reporting. AI workloads are the opposite: they’re iterative, data-hungry, and constantly evolving.

- Ticket-driven access turns “time-to-data” into “time-to-missed-opportunity”. If access requires manual approvals, ad-hoc exports, and spreadsheet-based tracking, the organisation teaches teams to route around process, and that’s where shadow copies and compliance surprises appear.

- Siloed governance creates contradictory rules. When “governance” lives separately from daily provisioning, teams either ignore it or re-implement it inconsistently. Either way, you lose the audit trail and the ability to explain decisions.

The headline: AI doesn’t just need data. It needs data that’s provisionable—discoverable, understandable, policy-controlled, and evidence-ready.

Part 2) What modern data provisioning actually means

Modern data provisioning is the discipline of delivering the right data to the right user or system for the right purpose—with controls and evidence baked in, not bolted on.

Traditional vs modern provisioning (the practical difference)

- Static copies → policy-driven access: fewer unmanaged extracts, more governed delivery.

- Manual approvals → metadata-triggered workflows: decisions become consistent and repeatable.

- Whole datasets → just-in-time slices: you provision only what’s needed for the job.

- Security after the fact → zero-trust by design: assume breach and minimise blast radius.

The 5 building blocks (a usable mental model)

- Automated discovery & classification: Continuously inventory assets, understand sensitivity, and tag what matters.

- Metadata-driven policy engine: Policies should read like business intent (purpose, role, sensitivity), not tribal knowledge.

- Dynamic access control: Time-bound, purpose-bound, and revocable access beats “forever access” every time.

- Federation across estates: Multi-cloud and hybrid are the norm. Provisioning should work across boundaries.

- Audit & observability: If you can’t explain who accessed what and why, you don’t truly control it.
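The dynamic access control block is the easiest to picture in code. Below is a minimal Python sketch, assuming a grant is simply a record of principal, dataset, purpose, and expiry; the `AccessGrant` class and `grant_for` helper are hypothetical names for illustration, not a specific product's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AccessGrant:
    """Hypothetical grant: who may use which dataset, for what purpose, until when."""
    principal: str
    dataset: str
    purpose: str
    expires_at: datetime
    revoked: bool = False

    def allows(self, principal: str, dataset: str, purpose: str, now: datetime) -> bool:
        # Every condition must hold: identity, dataset, purpose, time window, not revoked.
        return (
            not self.revoked
            and principal == self.principal
            and dataset == self.dataset
            and purpose == self.purpose
            and now < self.expires_at
        )

    def revoke(self) -> None:
        self.revoked = True


def grant_for(principal: str, dataset: str, purpose: str, days: int, now: datetime) -> AccessGrant:
    # Time-bound by construction: a grant cannot be created without an expiry.
    return AccessGrant(principal, dataset, purpose, now + timedelta(days=days))


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
g = grant_for("analyst-42", "claims_2024", "fraud-model-training", days=30, now=now)
print(g.allows("analyst-42", "claims_2024", "fraud-model-training", now))  # True
print(g.allows("analyst-42", "claims_2024", "marketing", now))  # False: wrong purpose
print(g.allows("analyst-42", "claims_2024", "fraud-model-training", now + timedelta(days=31)))  # False: expired
g.revoke()
print(g.allows("analyst-42", "claims_2024", "fraud-model-training", now))  # False: revoked
```

Making expiry mandatory at creation time is the design choice that turns “forever access” into an exception someone has to justify, rather than the default.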

Why this matters now

Two forces are converging:

- AI systems are continuous consumers of data, not one-off projects.

- Regulators increasingly care about operational evidence, not marketing claims—especially as AI rules harden around governance and documentation expectations. For UK organisations, alignment with evolving domestic frameworks—alongside interoperability with EU standards for cross-border operations—remains a practical priority.

Part 3) The foundation: data health & healing before you provision

Provisioning broken data at speed just spreads damage faster. So modern programmes start with data health: measuring whether an asset is ready to be consumed reliably.

A practical data health score (straightforward to operationalise)

You can implement this as a lightweight rubric (it doesn’t need to be perfect to be useful):

- Accuracy: does it reflect reality? (validation rules, anomaly checks)

- Completeness: are key fields populated? (missingness thresholds)

- Freshness: is it up to date for the use case? (SLA and lag monitoring)

- Accessibility: is it provisionable without heroics? (clear ownership + access path)

Set a threshold (e.g., “publishable” vs “needs remediation”) and enforce it before datasets appear in self-service portals.
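A minimal Python sketch of the rubric and the publish gate, assuming each dimension has already been scored between 0.0 and 1.0 by your existing checks. The equal weights, the 0.8 threshold, and the 0.5 per-dimension floor are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class HealthChecks:
    """Each dimension scored 0.0-1.0 by checks you already run (hypothetical inputs)."""
    accuracy: float       # e.g. share of rows passing validation rules
    completeness: float   # e.g. share of key fields populated
    freshness: float      # e.g. 1.0 if within SLA, lower as lag grows
    accessibility: float  # e.g. 1.0 if an owner and access path are recorded


# Illustrative values only; tune them per use case.
WEIGHTS = {"accuracy": 0.25, "completeness": 0.25, "freshness": 0.25, "accessibility": 0.25}
PUBLISH_THRESHOLD = 0.8  # assumed cut-off between "publishable" and "needs remediation"
MIN_DIMENSION = 0.5      # assumed floor that every single dimension must clear


def health_score(checks: HealthChecks) -> float:
    """Weighted average of the four dimensions."""
    return sum(getattr(checks, dim) * w for dim, w in WEIGHTS.items())


def gate(checks: HealthChecks) -> str:
    # A weak dimension can hide behind a good average, so enforce a floor first.
    if min(getattr(checks, dim) for dim in WEIGHTS) < MIN_DIMENSION:
        return "needs remediation"
    return "publishable" if health_score(checks) >= PUBLISH_THRESHOLD else "needs remediation"


print(gate(HealthChecks(accuracy=0.95, completeness=0.9, freshness=1.0, accessibility=0.8)))  # publishable
print(gate(HealthChecks(accuracy=1.0, completeness=1.0, freshness=1.0, accessibility=0.4)))   # needs remediation
```

The per-dimension floor matters: without it, a dataset with excellent accuracy but no recorded owner could still average its way into the self-service portal.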

Common “data diseases” that create swamps

- Data rot: assets no one uses but everyone is afraid to delete

- Duplication syndrome: multiple “versions of truth”

- Schema drift: fields change without notice, pipelines silently break

- Access paralysis: data exists, but nobody can access it safely

These problems are precisely how data lakes degrade into swamps over time.
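Two of these diseases, schema drift and data rot, can be flagged mechanically. The Python sketch below assumes you keep a registered schema per asset and a last-accessed date from your query logs; both inputs and the 365-day idle limit are hypothetical.

```python
from datetime import date

# Hypothetical registered ("expected") schema for one asset: column name -> type name.
registered = {"customer_id": "int", "email": "str", "signup_date": "date"}


def schema_drift(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Compare an observed schema with the registered one and report every difference."""
    return {
        "added": sorted(set(observed) - set(expected)),
        "removed": sorted(set(expected) - set(observed)),
        "retyped": sorted(c for c in set(expected) & set(observed) if expected[c] != observed[c]),
    }


def is_rot_candidate(last_accessed: date, today: date, max_idle_days: int = 365) -> bool:
    """Flag assets nobody has read for a long time: a candidate for review, not auto-deletion."""
    return (today - last_accessed).days > max_idle_days


observed = {"customer_id": "str", "email": "str", "signup_ts": "datetime"}
print(schema_drift(registered, observed))
# {'added': ['signup_ts'], 'removed': ['signup_date'], 'retyped': ['customer_id']}
print(is_rot_candidate(date(2023, 1, 10), today=date(2025, 1, 10)))  # True
```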

Healing strategies that work in real environments

- Automated cleansing pipelines
  - Validate at ingestion (types, ranges, referential checks)
  - Apply consistent quality rules (e.g., Great Expectations- or Deequ-style assertions)

- Schema evolution management
  - Version schemas and enforce backwards compatibility where possible
  - Capture change events as first-class metadata

- Synthetic data injection (for safe testing and coverage gaps): When teams can’t share production data, or when edge cases are too rare, synthetic data can help create safer, repeatable datasets for testing and model evaluation. The key is to pair generation with utility and privacy risk evaluation so teams know what the data is fit for.
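As an illustration of validating at ingestion, here is a plain-Python sketch of type, range, and referential checks with a quarantine path. It follows the spirit of Great Expectations or Deequ assertions but deliberately uses neither library's API; the field names, the amount range, and `KNOWN_CUSTOMER_IDS` are hypothetical.

```python
# Hypothetical reference data used for the referential check.
KNOWN_CUSTOMER_IDS = {101, 102, 103}


def validate_row(row: dict) -> list[str]:
    """Return human-readable failures for one incoming record (empty list = valid)."""
    errors = []
    # Type checks
    if not isinstance(row.get("customer_id"), int):
        errors.append("customer_id: expected int")
    if not isinstance(row.get("amount"), (int, float)):
        errors.append("amount: expected number")
    # Range check (assumed business rule: 0 < amount < 1,000,000)
    elif not 0 < row["amount"] < 1_000_000:
        errors.append("amount: out of range")
    # Referential check: the customer must exist in the reference table
    if isinstance(row.get("customer_id"), int) and row["customer_id"] not in KNOWN_CUSTOMER_IDS:
        errors.append("customer_id: unknown customer")
    return errors


def split_batch(rows: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Route valid rows onward and quarantine invalid ones together with their reasons."""
    accepted, quarantined = [], []
    for row in rows:
        errs = validate_row(row)
        if errs:
            quarantined.append((row, errs))
        else:
            accepted.append(row)
    return accepted, quarantined


batch = [
    {"customer_id": 101, "amount": 250.0},
    {"customer_id": 999, "amount": 40},
    {"customer_id": "102", "amount": -5},
]
accepted, quarantined = split_batch(batch)
print(len(accepted), len(quarantined))  # 1 2
```

Quarantining with explicit reasons, rather than silently dropping rows, is what turns validation results into evidence you can show later.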

A 4-week “rescue” pattern (repeatable)

- Week 1: Discovery – inventory + ownership mapping

- Week 2: Classification – sensitivity labels + usage criticality

- Week 3: Archiving – remove ROT (redundant, obsolete, trivial) assets, document retention decisions

- Week 4: Governance – publish policies and make them executable

This is how you stop the “storage grows, trust shrinks” cycle, which matters all the more given the IDC finding that most data is never put to work.

Part 4) Semantic layer: making provisioning business-friendly

Provisioning fails when only technical teams can interpret assets.

A semantic layer is the translation layer between raw structures and business meaning—so “customer”, “revenue”, and “risk exposure” are consistent across tools, teams, and AI systems.

Why it matters for provisioning (not just BI)

Provisioning decisions become dramatically simpler when policies can reference meaning:

- “Marketing can access aggregated customer segments”

- “Researchers can access de-identified cohort-level stats”

- “AI agents can access approved features with lineage recorded”
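To show how a policy can reference meaning instead of table names, here is a Python sketch in which rules are written against business terms, and a hypothetical semantic-layer mapping resolves those terms to physical columns only after a policy matches. The terms, column paths, roles, and purposes are all illustrative assumptions.

```python
# Hypothetical semantic layer: business term -> physical column.
SEMANTIC_LAYER = {
    "customer_segment": "crm.cust.seg_cd",
    "customer_email": "crm.cust.email_addr",
    "cohort_size": "research.cohort.n",
}

# Policies written as business intent: who, why, which terms, at what granularity.
POLICIES = [
    {"role": "marketing", "purpose": "campaign-planning",
     "terms": {"customer_segment"}, "granularity": "aggregated"},
    {"role": "researcher", "purpose": "cohort-study",
     "terms": {"cohort_size"}, "granularity": "aggregated"},
]


def resolve_request(role: str, purpose: str, terms: set[str], granularity: str) -> list[str]:
    """Return the physical columns to provision, or raise PermissionError explaining why not."""
    unknown = terms - set(SEMANTIC_LAYER)
    if unknown:
        raise PermissionError(f"unknown business terms: {sorted(unknown)}")
    for policy in POLICIES:
        if (policy["role"] == role and policy["purpose"] == purpose
                and terms <= policy["terms"] and granularity == policy["granularity"]):
            # Only after a policy matches is meaning translated into physical structures.
            return [SEMANTIC_LAYER[t] for t in sorted(terms)]
    raise PermissionError(
        f"no policy lets {role} use {sorted(terms)} for {purpose} at {granularity} level")


print(resolve_request("marketing", "campaign-planning", {"customer_segment"}, "aggregated"))
# ['crm.cust.seg_cd']
try:
    resolve_request("marketing", "campaign-planning", {"customer_email"}, "aggregated")
except PermissionError as err:
    print(err)
```

Because the rules never mention `crm.cust.seg_cd` directly, a table rename only touches the semantic layer, not every policy.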

Two implementation patterns

Pattern A: Lightweight semantic views

- SQL views or dbt models that standardise business metrics

- Well-suited for single data warehouses and smaller estates

Pattern B: Universal semantic layer

- A dedicated layer serving multiple BI tools and data consumers

- Better for enterprise estates with multiple sources and teams

Ontology: the next step when you need richer context

An ontology is a formal model of entities and relationships (customers, products, transactions, encounters, etc.). It becomes valuable when you need:

- context-aware access controls

- stronger lineage and traceability

- richer grounding for LLM and agentic AI retrieval
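A toy ontology can be as simple as a set of (subject, relation, object) triples. The Python sketch below, using hypothetical entity and dataset names, walks `derived_from` edges to recover lineage and then works out which business entities a derived dataset ultimately describes: the kind of context an access control decision could use.

```python
from collections import deque

# Hypothetical ontology facts as (subject, relation, object) triples.
TRIPLES = {
    ("Customer", "has", "Transaction"),
    ("Transaction", "involves", "Product"),
    ("dataset:raw_payments", "describes", "Transaction"),
    ("dataset:fraud_features", "derived_from", "dataset:raw_payments"),
    ("dataset:fraud_scores", "derived_from", "dataset:fraud_features"),
}


def upstream(node: str) -> list[str]:
    """Breadth-first walk over 'derived_from' edges: every upstream source of a dataset."""
    seen, queue, order = set(), deque([node]), []
    while queue:
        current = queue.popleft()
        for s, r, o in sorted(TRIPLES):
            if s == current and r == "derived_from" and o not in seen:
                seen.add(o)
                order.append(o)
                queue.append(o)
    return order


def entities_touched(dataset: str) -> set[str]:
    """Business entities a dataset ultimately describes, found through its lineage."""
    sources = [dataset] + upstream(dataset)
    return {o for s, r, o in TRIPLES if s in sources and r == "describes"}


print(upstream("dataset:fraud_scores"))
# ['dataset:fraud_features', 'dataset:raw_payments']
print(entities_touched("dataset:fraud_scores"))  # {'Transaction'}
```

Here a policy could, for example, treat `dataset:fraud_scores` as transaction data even though no raw transaction table is queried directly.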

Part 5) Zero-trust collaboration: sharing without losing control

Every regulated organisation hits the same paradox:

- Collaboration is a business requirement (partners, vendors, internal teams).

- Uncontrolled sharing creates AI privacy risk and audit exposure.

Zero-trust provisioning offers a pragmatic middle path: never trust, always verify—and design every workflow as if a breach will happen.

What “zero-trust provisioning” looks like in practice

- Identity verification before data access

- Least-privilege permissions (role + purpose + duration)

- Continuous monitoring and revocation

- Evidence logs that survive organisational changes and tool changes
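The practices above can be combined in a small Python sketch, assuming a set of already-verified identities (for example, from your identity provider) and explicit (user, dataset, purpose) grants; all names are hypothetical. Each decision, allowed or denied, is written with its reason to an append-only log in which every entry hashes its predecessor, so altering a recorded entry is detectable on verification.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical inputs: verified identities and least-privilege grants.
VERIFIED_IDENTITIES = {"vendor-a@partner.example"}
GRANTS = {("vendor-a@partner.example", "synthetic_claims", "model-validation")}

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict, prev: str) -> str:
    return hashlib.sha256(json.dumps({**record, "prev": prev}, sort_keys=True).encode()).hexdigest()


class EvidenceLog:
    """Append-only log; each entry hashes the previous one, so altering an entry breaks verification."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({**record, "prev": prev, "hash": _digest(record, prev)})

    def verify(self) -> bool:
        prev = GENESIS
        for e in self.entries:
            record = {k: v for k, v in e.items() if k not in ("prev", "hash")}
            if e["prev"] != prev or e["hash"] != _digest(record, prev):
                return False
            prev = e["hash"]
        return True


def request_access(log: EvidenceLog, user: str, dataset: str, purpose: str) -> bool:
    # Never trust, always verify: identity first, then an explicit grant for this exact purpose.
    if user not in VERIFIED_IDENTITIES:
        allowed, reason = False, "identity not verified"
    elif (user, dataset, purpose) not in GRANTS:
        allowed, reason = False, "no grant for this dataset and purpose"
    else:
        allowed, reason = True, "grant matched"
    log.append({"ts": datetime.now(timezone.utc).isoformat(), "user": user, "dataset": dataset,
                "purpose": purpose, "allowed": allowed, "reason": reason})
    return allowed


log = EvidenceLog()
print(request_access(log, "vendor-a@partner.example", "synthetic_claims", "model-validation"))  # True
print(request_access(log, "vendor-a@partner.example", "raw_claims", "model-validation"))  # False
print(log.verify())  # True
log.entries[1]["allowed"] = True  # simulate tampering with a recorded decision
print(log.verify())  # False
```

Recording the reason alongside the decision also covers the explainability question of why access was granted or denied.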

Where synthetic data fits

Synthetic data is often the most practical way to enable collaboration when:

- production data is too sensitive to export

- test environments need realistic distributions

- vendors must validate models without seeing raw records

The win is not “synthetic data replaces everything.” The win is: it creates a safer default collaboration dataset—when paired with validation and risk checks.

Part 6) AI governance: provisioning and governance are inseparable now

Governance becomes real when it’s operational—when you can produce evidence of controls, not just describe them.

A strong signal from Europe is that AI regulation is trending toward documentation and standardised disclosure artefacts, not vague promises. For example, under the EU AI Act the European Commission has published a template and explanatory materials for a public summary of training data content for general-purpose AI models, showing how compliance often becomes “fill this format with evidence you can prove.”

For UK organisations, whilst domestic frameworks continue to evolve, maintaining alignment with EU standards remains prudent for those with cross-border operations or European clientele.

The five governance pillars that matter in provisioning

- Transparency: who accessed what, when, and for what purpose

- Explainability: why access was granted or denied

- Fairness: consistent access rules across comparable roles

- Accountability: clear owners for datasets and policies

- Compliance-readiness: evidence artefacts generated as a by-product of operations

Evidence-first provisioning (the approach UK teams can action)

Build an internal “evidence pack” per critical dataset:

- Data source and rights/permissions notes

- Lineage and transformation history

- Quality checks and threshold results

- Access logs (purpose + time + user/system)

- Retention decisions and change history

This evidence-first stance aligns well with the direction of emerging governance expectations across both UK and EU jurisdictions.
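One way to keep the evidence pack from becoming another scattered spreadsheet is to give it a fixed structure that can report its own gaps. A minimal Python sketch follows, with a hypothetical `EvidencePack` class whose fields mirror the five items listed above; the example values are illustrative only.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class EvidencePack:
    """Hypothetical per-dataset evidence pack; one field per evidence item."""
    dataset: str
    source_and_rights: str = ""
    lineage: list[str] = field(default_factory=list)
    quality_results: dict[str, float] = field(default_factory=dict)
    access_log: list[dict] = field(default_factory=list)
    retention_and_changes: list[str] = field(default_factory=list)

    def missing_items(self) -> list[str]:
        """Name every section that is still empty, so gaps surface before an audit does."""
        return [name for name, value in asdict(self).items() if name != "dataset" and not value]

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


pack = EvidencePack(dataset="claims_2024", source_and_rights="Internal claims system (hypothetical)")
pack.lineage.append("raw_claims -> claims_clean (dedupe, type checks)")
pack.quality_results["completeness"] = 0.97
print(pack.missing_items())  # ['access_log', 'retention_and_changes']
```

In practice each field would be filled by the workflow that produces it (quality gate, access broker, retention job) rather than by hand, which is what makes the evidence a by-product of operations.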

Part 7) UK industry patterns (what shows up most in practice)

Rather than speculate about named case studies, here are the patterns that most consistently drive provisioning programmes in UK regulated sectors:

Pattern A: “We have a lake, but nobody trusts it”

- Duplicate datasets, unclear owners, and inconsistent definitions

- Teams rebuild the same transformations in parallel

Provisioning fix: data health gates + semantic layer + ownership enforcement

Pattern B: “We need vendors to validate models, but we can’t share production data”

- Testing, model validation, and analytics partnerships stall

Provisioning fix: synthetic collaboration datasets + risk/utility validation + tight policy controls

Pattern C: “Healthcare-style complexity: many systems, high sensitivity, slow access”

European healthcare data governance is moving toward standardised infrastructure and cross-ecosystem interoperability. The European Health Data Space (EHDS) direction and related governance expectations increase the pressure for clean metadata, access control, and traceable usage.

For medical AI and medical device contexts, EU guidance on how AI rules interplay with existing medical device frameworks highlights the same theme: traceability, controlled change, and documented controls. UK healthcare organisations increasingly find value in aligning with these standards, particularly those serving international patients or conducting cross-border research.

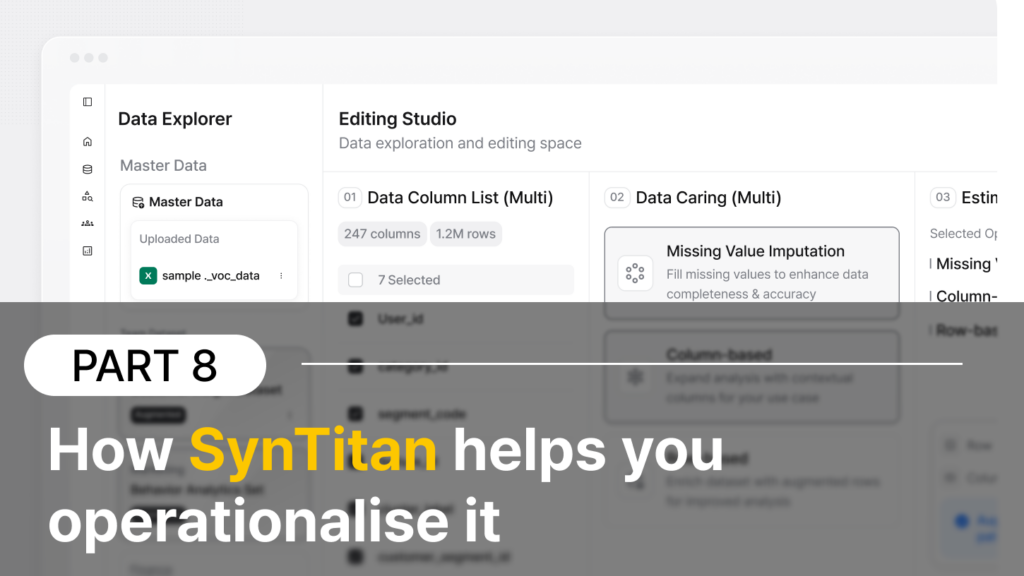

Part 8) A practical stack—and how SynTitan helps you operationalise it

Most UK teams don’t fail because they lack tools. They fail because tools don’t connect into an end-to-end operating model—from data health → semantic meaning → controlled provisioning → evidence artefacts.

A modern tool stack (what it usually includes)

- Discovery & cataloguing (inventory + ownership + classification)

- Semantic layer (business glossary + shared metrics)

- Policy & access control (purpose/time/role controls)

- Audit & observability (logs, anomaly detection, evidence packs)

- Synthetic data capability (safe collaboration and testing datasets)

The shift that unlocks budgets: “evidence-ready operations”

Across Europe, the trend is clear: regulations increasingly translate into specific documentation outputs and traceable operating evidence—not just “secure by design” statements. The Commission’s move to publish structured templates for training data summaries is a strong indicator of what “compliance” often becomes in practice: repeatable artefacts generated from your operating process.

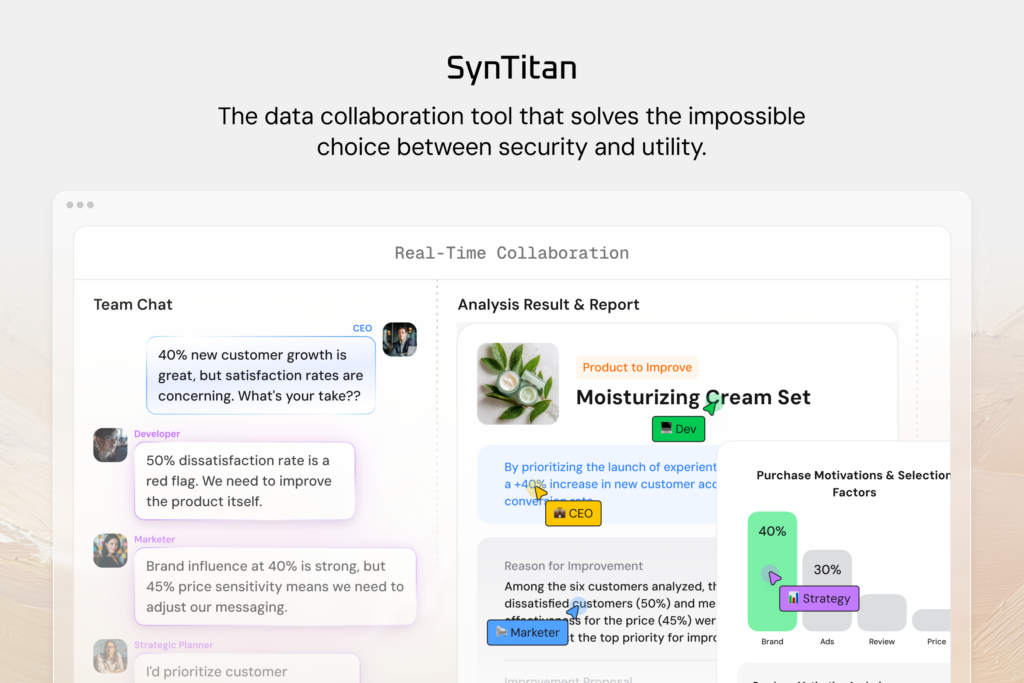

Where SynTitan fits (and why it’s different)

SynTitan is built around one practical goal: turning data into AI-ready assets you can use, share, and govern—with evidence.

In a provisioning programme, SynTitan is typically used to:

- Assess and improve data readiness (profiling and quality/consistency checks as part of “data health”)

- Create safer datasets for collaboration (including synthetic datasets for testing, vendor validation, and cross-team workflows)

- Support governance outcomes by keeping readiness work, validation outputs, and collaboration workflows connected—so evidence isn’t scattered across tools and spreadsheets

A simple “next step” UK teams can act on this week

If you’re evaluating modern data provisioning, start with an “Evidence Requirements Map” for one high-value domain (fraud, customer analytics, clinical operations, etc.):

- What deadlines or oversight expectations apply?

- Which policy documents matter for your organisation? (Both UK domestic frameworks and EU standards may be relevant for cross-border operations)

- What artefacts would you need in an audit or procurement review? (logs, lineage, quality reports, access decisions)

- Which of those artefacts can be generated automatically from your provisioning workflow?

SynTitan is designed to help teams move from “we think we’re compliant” to “we can show the evidence”—whilst also improving the day-to-day reality of AI delivery: faster access, higher trust, and safer collaboration.

If you want to pressure-test this quickly: choose one dataset that is currently hard to share or hard to use for AI, define the target provisioning policy (purpose + duration + sensitivity), and design a synthetic collaboration dataset plus a minimal evidence pack as the deliverable. That single slice is often enough to prove value and unlock a scaled programme.
