Data Catalogs for AI: The Foundation for Governance, Agents, and Sovereign AI (7 Parts)



Summary

As organizations shift from analytics-first to AI-first, a familiar problem resurfaces: your data exists, but it’s hard to find, hard to trust, and harder to govern. Data catalogs have emerged as the structural fix—not just for discovery, but for AI governance, Sovereign AI compliance, and safe multi-agent operations.

This guide walks through why data catalogs became a board-level priority, the six layers of a mature catalog, and how catalogs support both regulatory requirements and agentic workflows. We also provide a 90-day implementation checklist and show how cataloged data becomes AI-ready through operational workflows.

Part 1) Why Data Catalogs Became a Board-Level Priority

A few years ago, “data catalog” sounded like an internal tooling project—useful, but rarely urgent. That changed as organizations shifted from analytics-first to AI-first delivery.

Here’s the shift: AI programs (especially generative AI and agentic workflows) don’t just need “more data.” They need trusted, well-documented, governable data that can be safely reused across teams and systems. When metadata is missing, ownership is unclear, and access decisions rely on manual ticketing, your “data lake” starts behaving like a data swamp—data exists, but it’s hard to find, hard to trust, and harder to govern at scale.

In that environment, teams tend to do predictable things:

- Duplicate datasets “just in case” (creating conflicting versions of truth)

- Work around governance (shadow pipelines, spreadsheet exports, unofficial copies)

- Underuse valuable data because it’s too risky or too slow to access

A data catalog is the structural antidote: it’s the layer that makes datasets discoverable, understandable, and governable—and that matters directly to AI delivery speed and privacy assurance.

Part 2) What a Data Catalog Is (and What It Isn’t)

In plain terms:

A data catalog is a metadata-driven system that helps organizations discover, understand, and govern data assets (tables, files, dashboards, models, APIs) by capturing technical metadata, business context, and governance signals.

Many organizations start with the “inventory” view (datasets + owners). Mature implementations typically span six functional layers:

1) Discovery & Inventory: What exists, where it lives (warehouse, lake, SaaS), who owns it

2) Technical Metadata: Schemas, data types, partitions, refresh cadence, connectors (For example, cloud ecosystems describe catalog components as metadata stores for data assets.)

3) Business Context: Business glossary, definitions (“revenue”, “active customer”), domain tags

4) Sensitivity & Privacy Signals: Classification labels (PII, financial, health), usage constraints, consent tags

5) Lineage & Change Visibility: Where data came from, what transforms touched it, what breaks if it changes

6) Access + Audit Hooks: Which roles used what, for what purpose, and when, so governance is provable
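As a rough illustration of how the six layers could sit on a single catalog record, here is a minimal sketch. The class `CatalogEntry`, its field names, and the `missing_layers` helper are assumptions made for this example, not a standard catalog schema or any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """One dataset record; field names are illustrative, not a standard."""
    # 1) Discovery & inventory
    name: str
    location: str            # e.g. "warehouse", "lake", "saas"
    owner: str
    # 2) Technical metadata
    schema: dict = field(default_factory=dict)           # column -> type
    refresh_cadence: str = ""
    # 3) Business context
    glossary_terms: list = field(default_factory=list)
    # 4) Sensitivity & privacy signals
    classifications: list = field(default_factory=list)  # e.g. ["PII"]
    # 5) Lineage & change visibility
    upstream: list = field(default_factory=list)
    # 6) Access + audit hooks
    access_log: list = field(default_factory=list)

    def record_access(self, role, purpose, when):
        """Append one audit record: which role, for what purpose, and when."""
        self.access_log.append({"role": role, "purpose": purpose, "when": when})

    def missing_layers(self):
        """Return the layers beyond basic inventory that are still empty."""
        checks = {
            "technical_metadata": bool(self.schema),
            "business_context": bool(self.glossary_terms),
            "sensitivity": bool(self.classifications),
            "lineage": bool(self.upstream),
            "audit": bool(self.access_log),
        }
        return [layer for layer, present in checks.items() if not present]


# An "inventory only" entry: it exists and has an owner, nothing more.
entry = CatalogEntry(name="crm.customers", location="warehouse", owner="sales-data-team")
print(entry.missing_layers())
```

An entry that only carries the inventory fields reports every other layer as missing, which is one simple way to measure how far a catalog is from the mature state described above. Note that a true source dataset may legitimately have no upstream lineage, so a real check would need to allow for that.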

What a data catalog is not:

- Not just documentation in a wiki

- Not only a list of datasets

- Not a replacement for data quality tooling (but it should integrate with it)

If you need a single mental model: a data catalog becomes the control plane for trusted data use.

Part 3) Sovereign AI: Where Catalogs Become the Compliance Backbone

“Sovereign AI” refers to AI systems developed, operated, and controlled within a specific jurisdiction, aligned with local regulations, priorities, and autonomy requirements.

Whether your organization is a public-sector body, a regulated enterprise, or a global company operating across regions, Sovereign AI pressure usually surfaces as practical questions:

- Where is the data stored and processed?

- Who can access it, and on what basis?

- Can we demonstrate compliance and governance decisions later?

- Do we have the artifacts auditors and procurement teams require?

This is where data catalog discipline becomes critical. A catalog supports Sovereign AI by making sovereignty operational (not aspirational) through:

A) Metadata that encodes jurisdiction + constraints: Region, residency, retention class, allowed purpose, transfer restrictions

B) Auditability and “proof”: UK GDPR strongly emphasizes accountability: organizations should be able to demonstrate compliance, not just claim it. A catalog-led approach makes evidence easier to produce because decisions and usage can be traced.

C) Evidence artifacts for modern AI governance: In Europe, regulatory momentum increasingly pushes organizations toward structured transparency outputs. For example, the European Commission has published an explanatory notice and template related to public summaries of training content for general-purpose AI models, an illustration of how “documentation outputs” are becoming standardized.
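To make point A concrete, the sketch below shows one way jurisdiction metadata could drive a residency and purpose check that also returns its reasons, so the decision can be kept as evidence. `SovereigntyTags`, `check_use`, and the region and purpose values are hypothetical names chosen for illustration; real rules would come from your legal and compliance teams.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SovereigntyTags:
    """Jurisdiction metadata attached to a dataset (illustrative fields)."""
    residency_region: str                    # where the data must normally stay
    retention_class: str                     # e.g. "7y"
    allowed_purposes: frozenset              # e.g. {"analytics"}
    transfer_regions: frozenset = frozenset()  # regions where transfer is permitted


def check_use(tags, processing_region, purpose):
    """Decide whether a proposed use respects the tags, and say why not."""
    reasons = []
    if purpose not in tags.allowed_purposes:
        reasons.append(f"purpose '{purpose}' is not an allowed purpose")
    in_region = processing_region == tags.residency_region
    if not in_region and processing_region not in tags.transfer_regions:
        reasons.append(
            f"processing in '{processing_region}' conflicts with "
            f"residency '{tags.residency_region}'"
        )
    # The reasons list doubles as an evidence record for later review.
    return {"allowed": not reasons, "reasons": reasons}


tags = SovereigntyTags(
    residency_region="UK",
    retention_class="7y",
    allowed_purposes=frozenset({"analytics"}),
    transfer_regions=frozenset({"EU"}),
)
print(check_use(tags, "UK", "analytics"))
print(check_use(tags, "US", "model_training"))
```

Returning the reasons alongside the decision is a deliberate choice here: it means a denial can be explained at the time and reproduced later, which is the kind of traceability point B describes.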

Key takeaway: Sovereign AI isn’t only about model hosting. It’s about whether your organization can run AI with controllable data flows and auditable governance—and a data catalog is one of the most practical foundations for that.

Part 4) Multi-Agent Systems: Why Agents Need Cataloged Context

Once you introduce agents, data risk compounds:

- Agents retrieve data, transform it, pass it to tools, and generate outputs

- Small metadata gaps can cascade into operational failures

- Without clear constraints, agents can “overreach” into sensitive domains

A data catalog reduces agent risk by providing machine-usable context and human-governable rules:

1) Agents need “meaning,” not just tables: If agents can’t reliably interpret fields, definitions, or lineage, you get brittle automation. Business glossaries and semantic tags help agents choose the right sources.

2) Agents need guardrails grounded in metadata: Purpose, role, sensitivity tags, and allowed usage windows should be readable by governance systems (and ideally enforceable).

3) Agents need provenance and traceability: When an agent output drives a decision, teams will ask: Which datasets were used? Which version? Under what policy? Catalog lineage and audit hooks support those answers.
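The three needs above can be sketched as a single lookup that an agent (or the layer in front of it) calls before touching data. Everything here is an assumption for illustration: the `CATALOG` records, the `SENSITIVITY_RANK` ordering, and the `sources_for_agent` function are hypothetical, not part of any agent framework.

```python
from datetime import time

# Hypothetical ordering of sensitivity labels, lowest to highest.
SENSITIVITY_RANK = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}

# Hypothetical catalog records with glossary terms, guardrails, and versions.
CATALOG = [
    {"name": "finance.revenue_daily", "version": "v12",
     "glossary_terms": ["revenue"], "sensitivity": "internal",
     "allowed_purposes": ["analytics", "agent_reporting"],
     "usage_window": (time(6, 0), time(22, 0))},
    {"name": "finance.revenue_by_customer", "version": "v4",
     "glossary_terms": ["revenue", "active customer"], "sensitivity": "restricted",
     "allowed_purposes": ["analytics"],
     "usage_window": (time(0, 0), time(23, 59))},
    {"name": "scratch.revenue_copy", "version": "v1",
     "glossary_terms": [],  # undocumented duplicate: no meaning attached
     "sensitivity": "internal", "allowed_purposes": ["agent_reporting"],
     "usage_window": (time(0, 0), time(23, 59))},
]


def sources_for_agent(catalog, term, purpose, max_sensitivity, at):
    """Return the datasets an agent may use for a glossary term right now."""
    limit = SENSITIVITY_RANK[max_sensitivity]
    selected = []
    for ds in catalog:
        start, end = ds["usage_window"]
        if (
            term in ds["glossary_terms"]                        # 1) meaning
            and purpose in ds["allowed_purposes"]               # 2) guardrails
            and SENSITIVITY_RANK[ds["sensitivity"]] <= limit
            and start <= at <= end
        ):
            # 3) provenance: keep name and version so the output can cite them
            selected.append({"name": ds["name"], "version": ds["version"]})
    return selected


print(sources_for_agent(CATALOG, "revenue", "agent_reporting", "internal", time(10, 30)))
```

In this sketch the undocumented copy is never selected because it carries no glossary term, and the restricted table is excluded by both purpose and sensitivity. The agent is left with one documented, versioned source.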

In practice, when teams complain that “our agents are inconsistent,” the root cause often isn’t the agent itself—it’s uncataloged, low-trust data feeding them.

Part 5) The Reference Architecture: Catalog → Policy → Provisioning → Audit

Here’s a practical reference model:

1) Data Catalog (truth about data): Inventory + metadata + glossary + classifications + lineage pointers

2) Policy (truth about who can use it and why): Rules that map roles/purposes/locations to allowed datasets

3) Provisioning (how data is delivered safely): Approved, policy-constrained access patterns: views, masked extracts, short-lived access, or synthetic datasets

4) Audit & Observability (proof and monitoring): Logs and traces that show: who accessed what, when, for what purpose, under what policy
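A minimal sketch of how one access request might pass through the four stages is shown below. The in-memory `CATALOG`, `POLICIES`, and `AUDIT_LOG` structures, the policy IDs, and the `request_access` function are all hypothetical stand-ins; a real deployment would use a catalog service, a policy engine, and durable logging.

```python
from datetime import datetime, timezone

# 1) Catalog: truth about data (hypothetical entries).
CATALOG = {
    "crm.customers": {"sensitivity": "pii", "owner": "sales-data-team"},
    "ops.tickets": {"sensitivity": "internal", "owner": "support-team"},
}

# 2) Policy: which role and purpose may use which sensitivity class, and how.
POLICIES = [
    {"id": "P1", "role": "analyst", "purpose": "analytics",
     "sensitivity": "internal", "provision": "view"},
    {"id": "P2", "role": "analyst", "purpose": "analytics",
     "sensitivity": "pii", "provision": "masked_view"},
    {"id": "P3", "role": "vendor", "purpose": "testing",
     "sensitivity": "pii", "provision": "synthetic_dataset"},
]

# 4) Audit: every decision is recorded, including denials.
AUDIT_LOG = []


def request_access(dataset, role, purpose):
    """Return the provisioning pattern for a request, or None if denied."""
    entry = CATALOG.get(dataset)  # uncataloged data cannot match any policy
    policy = None
    if entry is not None:
        policy = next(
            (p for p in POLICIES
             if (p["role"], p["purpose"], p["sensitivity"])
             == (role, purpose, entry["sensitivity"])),
            None,
        )
    # 3) Provisioning: the policy decides the delivery pattern.
    provision = policy["provision"] if policy else None
    AUDIT_LOG.append({
        "when": datetime.now(timezone.utc).isoformat(),
        "dataset": dataset, "role": role, "purpose": purpose,
        "policy": policy["id"] if policy else None,
        "decision": provision or "deny",
    })
    return provision


print(request_access("crm.customers", "analyst", "analytics"))
print(request_access("crm.customers", "vendor", "testing"))
print(request_access("shadow.export", "analyst", "analytics"))
```

The design choice worth noting is that denials are logged the same way as approvals. The request for the uncataloged `shadow.export` returns `None`, but it still leaves an audit record showing that no policy applied.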

This architecture matters because it scales across:

- Multi-cloud estates

- Multiple business units

- External collaborators (vendors, agencies, research partners)

- Multi-agent workflows that generate significant “data touches”

A useful rule of thumb: if it isn’t cataloged, it isn’t governable. And if it isn’t auditable, it won’t survive procurement and regulatory pressure for long.

Part 6) Implementation Checklist (What to Do in the First 90 Days)

Days 1–30: Establish the catalog baseline

- Identify 2–3 priority domains (e.g., customer, finance, operations)

- Build the inventory: systems, datasets, owners, refresh cadence

- Start classification: sensitivity labels and basic constraints

- Create a minimal business glossary for high-traffic metrics

Deliverables:

- A usable catalog for priority domains

- Ownership map (who approves what)

- Initial sensitivity taxonomy

Days 31–60: Connect governance to real workflows

- Tie catalog metadata to access workflows (even if manual initially)

- Define “gold” datasets and deprecate unofficial duplicates where feasible

- Add lineage for critical pipelines (source → transforms → serving)

Deliverables:

- Policy draft for top 10–20 datasets

- First lineage map

- Basic audit trail for access decisions
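For the lineage step in this phase (source → transforms → serving), a first lineage map can be as simple as an adjacency list, which is already enough to answer "what breaks if this changes". The dataset names in `LINEAGE` and the `downstream` helper are made up for illustration.

```python
from collections import deque

# Hypothetical lineage map: dataset -> datasets that are built directly from it.
LINEAGE = {
    "raw.orders": ["staging.orders_clean"],
    "staging.orders_clean": ["marts.revenue_daily", "marts.customer_ltv"],
    "marts.revenue_daily": ["dashboard.finance"],
}


def downstream(lineage, start):
    """List everything affected by a change to `start`, nearest first."""
    seen = set()
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:  # guard against revisiting shared children
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


print(downstream(LINEAGE, "staging.orders_clean"))
# ['marts.revenue_daily', 'marts.customer_ltv', 'dashboard.finance']
```

A breadth-first walk is used so that the closest dependents appear first, which tends to match the order in which owners need to be notified of a change.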

Days 61–90: Make it AI-ready (agents and Sovereign AI pressure-tested)

- Add purpose-based usage tags (analytics, model training, testing)

- Introduce safer provisioning patterns: masked views, time-bounded access, and synthetic data for collaboration

- Define metrics: time-to-data, percentage of cataloged critical datasets, audit coverage

Deliverables:

- A repeatable provisioning pattern

- An “AI-ready dataset” checklist

- Evidence pack structure for audits/procurement
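The three metrics named in the Days 61–90 step (time-to-data, percentage of cataloged critical datasets, audit coverage) could be computed roughly as follows. The exact definitions are assumptions made for this sketch: time-to-data is taken as the median hours from request to grant, and audit coverage as the share of access events that record both a purpose and a policy. The function names are hypothetical.

```python
from datetime import datetime
from statistics import median


def catalog_coverage_pct(critical, cataloged):
    """Percentage of critical datasets that appear in the catalog."""
    critical = set(critical)
    if not critical:
        return 0.0
    return 100.0 * len(critical & set(cataloged)) / len(critical)


def median_time_to_data_hours(requests):
    """Median hours between an access request and the grant.

    `requests` is a list of (requested_at, granted_at) datetime pairs.
    """
    hours = [
        (granted - requested).total_seconds() / 3600
        for requested, granted in requests
    ]
    return median(hours)


def audit_coverage_pct(access_events):
    """Percentage of access events that record both purpose and policy."""
    if not access_events:
        return 0.0
    complete = sum(1 for e in access_events if e.get("purpose") and e.get("policy"))
    return 100.0 * complete / len(access_events)


requests = [
    (datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 15)),   # 6 hours
    (datetime(2024, 1, 2, 9), datetime(2024, 1, 3, 9)),    # 24 hours
    (datetime(2024, 1, 4, 9), datetime(2024, 1, 4, 11)),   # 2 hours
]
print(catalog_coverage_pct({"a", "b", "c", "d"}, {"a", "b", "c", "x"}))  # 75.0
print(median_time_to_data_hours(requests))                               # 6.0
```

The median is used for time-to-data rather than the mean so that one slow, exceptional request does not dominate the figure. Whichever definitions you choose, fixing them early makes the numbers comparable from one quarter to the next.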

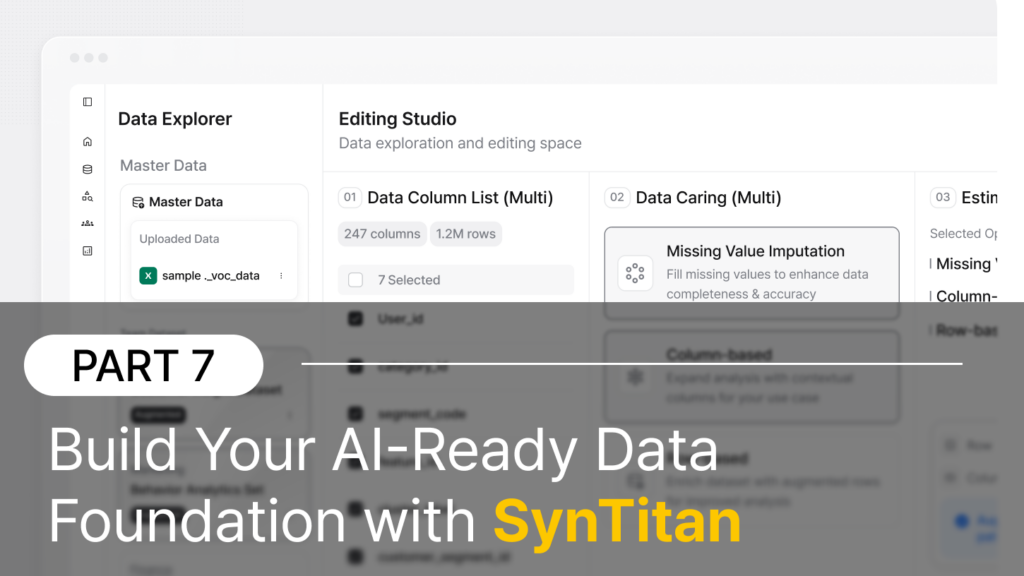

Part 7) Build Your AI-Ready Data Foundation with SynTitan

The principles we’ve covered—discovery, governance, auditability, safe provisioning—only matter if you can operationalize them without slowing your teams down.

That’s exactly what SynTitan is built for.

SynTitan is a data governance platform that helps organizations prepare, protect, and provision data for AI—at speed and at scale.

Privacy-Preserving Synthetic Data

Generate high-fidelity synthetic datasets that preserve analytical value while substantially reducing privacy risk. Share data across teams, partners, and borders without exposing the underlying sensitive records.

Built-In Governance & Compliance

Every transformation is logged. Every output is traceable. SynTitan produces the evidence artifacts that auditors, regulators, and procurement teams require—so compliance becomes a byproduct, not a bottleneck.

AI-Ready Output

Whether you’re training models, testing agents, or enabling cross-functional collaboration, SynTitan ensures your data is clean, consistent, and safe to use.

Enterprise-Grade Security

Designed for regulated industries—finance, healthcare, public sector—where data sovereignty and privacy aren’t optional.

Ready to Get Started?

If your AI initiatives are blocked by privacy constraints, slow data access, or governance gaps, let’s talk.

→ Request a Demo to see how SynTitan can accelerate your path to AI-ready data.

FAQ

Q: What does “AI-ready data” mean?

A: AI-ready data is data that has been cataloged, validated, and prepared for safe use in AI systems. It includes proper metadata, business context, governance controls, sensitivity classifications, and lineage tracking. While cataloged data tells you “what exists and how it should be governed,” AI-ready data is operationally usable—meaning it’s been standardized, privacy-protected where needed, and provisioned through auditable workflows. This ensures AI systems (including multi-agent workflows) can use data that is discoverable, trustworthy, and compliant with privacy regulations.

Q: What is SynTitan and how does it make data AI-ready?

A: SynTitan is a platform designed to operationalize AI-ready data workflows, especially where privacy constraints and safe collaboration are critical. While data catalogs provide the “control plane” for discovering and governing data, SynTitan focuses on the “execution plane” by:

- Standardizing & preparing cataloged data for AI consumption

- Generating privacy-preserving synthetic datasets for safe sharing with external teams, vendors, or across borders

- Producing verification outputs that document what data was used, under what policy, and with what privacy controls—making compliance provable

Think of it as the bridge between “we know what data we have” (catalog) and “we can safely use it for AI” (SynTitan).

Q: When should you use synthetic data instead of real data for AI?

A: Use synthetic data when:

- Privacy regulations restrict sharing of real data (for example GDPR, HIPAA, or data residency rules tied to Sovereign AI requirements)

- Collaborating with external parties (vendors, research partners, offshore teams)

- Testing and development environments need production-like data without privacy risk

- Data is too sensitive for broad access (healthcare records, financial transactions, PII)

- Cross-border collaboration is required (properly generated synthetic data may fall outside the residency restrictions that apply to the original records, subject to legal review)

SynTitan is designed so that synthetic data preserves the statistical properties and patterns of the real data, so AI models can perform comparably while privacy and compliance risks are greatly reduced. Verification outputs document the synthetic data quality and the privacy controls applied.
