On Data Privacy Day, What Standards Do We Need to Use AI Safely?

Hello, this is CUBIG, working to ensure enterprise data can actually be used in AI-driven work.

It has become difficult to find organizations that have not experimented with AI.

Generative AI and other tools have spread rapidly, and most enterprises have already gone through multiple pilots and proofs of concept. From a purely technical perspective, there seems to be little reason why AI should not work.

Yet in practice, organizations still handle AI cautiously.

Rather than being embedded into everyday workflows, it is often confined to limited, controlled use cases. This gap is rarely a problem of models or algorithms. More often, it stems from how data is governed, evaluated, and approved for use.

On January 28, Data Privacy Day, this gap becomes clearer.

The real question is not how well data is protected, but whether organizations have clear standards that allow data to be used responsibly under privacy constraints.

Plenty of Data, but No Clear Basis for Decisions

Most organizations already possess large volumes of data.

Between CRM systems, ERP platforms, internal operational systems, logs, and analytics pipelines, the challenge is rarely a lack of data.

The problem emerges the moment teams attempt to use it.

Concerns about personal data exposure, inconsistent access rules across departments, and regulatory risks during external collaboration all converge. As a result, data gradually shifts from being an asset for decision-making to something that must simply be managed and contained.

In many enterprises, data exists, but no one can confidently say whether it can be used.

Global research repeatedly highlights this issue.

AI initiatives fail to move beyond experimentation not because of weak models, but because of unclear data governance, limited trust in data quality, and the absence of repeatable decision standards. This challenge affects everything from AI in data analytics to broader AI in the enterprise.

Privacy Is Not a Constraint — It Demands an Operating Standard

Privacy is often perceived as a blocker to AI adoption.

The assumption is simple: the more protection is applied, the less usable data becomes.

Recent regulatory trends suggest otherwise.

Frameworks such as GDPR and the EU AI Act do not prohibit AI usage. Instead, they require organizations to explain which data was used, under what conditions, and based on which decisions. In other words, privacy regulation does not remove data from AI — it demands accountability.

The issue is that many organizations treat these requirements as post-hoc compliance checks. When privacy and governance are not embedded into data operations from the beginning, AI projects inevitably stall before reaching real-world use.

This is why defining and enforcing data governance standards — rather than reacting to them — has become essential for any organization working with AI and data analytics.

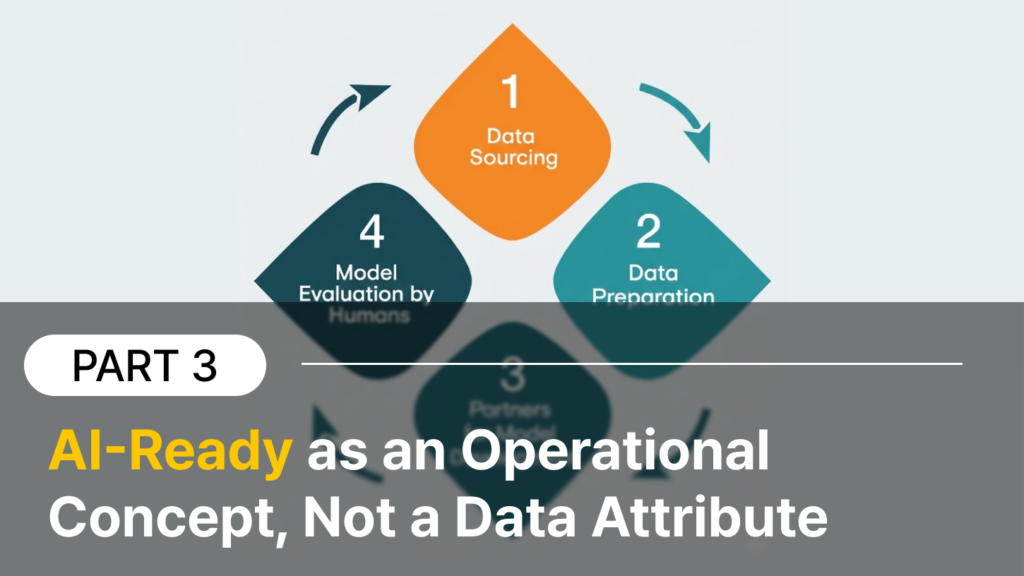

AI-Ready Is Not a Data State, but an Operational Language

AI-Ready data is often misunderstood as “clean” or “prepared” data.

This interpretation falls short. Data constantly changes. Distributions shift. Regulations evolve. A dataset prepared once cannot support AI operations indefinitely.

AI-Ready is not about the state of data.

It is about the language organizations use to make data decisions.

Which data is appropriate for a specific purpose?

What risks or constraints apply?

How was that decision made, and can it be reviewed later?

These questions must be answered continuously, documented explicitly, and applied consistently. Analysts, engineers, and governance teams need a shared operational understanding — not just technical pipelines.
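As a rough illustration, the three questions above could be captured in a small, reviewable decision record. The sketch below is hypothetical: the `DataUseDecision` class, its field names, and the sample values are assumptions made for this example, not part of any CUBIG product or regulatory schema.

```python
from dataclasses import dataclass, asdict
from datetime import date
import json


@dataclass(frozen=True)
class DataUseDecision:
    """One reviewable decision about using a dataset for a stated purpose.

    All field names are illustrative assumptions, not a standard schema.
    """
    dataset: str                  # which data
    purpose: str                  # what it will be used for
    constraints: tuple[str, ...]  # risks or conditions that apply
    approved: bool                # outcome of the decision
    rationale: str                # how the decision was made
    decided_by: str               # who is accountable
    decided_on: date
    review_by: date               # when the decision must be revisited

    def needs_review(self, today: date) -> bool:
        # A decision is due for review once its review date has arrived.
        return today >= self.review_by

    def to_json(self) -> str:
        # Serialize with ISO dates so the record can be stored and audited.
        record = asdict(self)
        record["decided_on"] = self.decided_on.isoformat()
        record["review_by"] = self.review_by.isoformat()
        return json.dumps(record, sort_keys=True)


# Hypothetical usage: record a decision and check whether it is due for review.
decision = DataUseDecision(
    dataset="crm_contacts",
    purpose="churn analysis",
    constraints=("no export outside internal network", "mask direct identifiers"),
    approved=True,
    rationale="Purpose matches the original collection notice.",
    decided_by="data-governance-team",
    decided_on=date(2025, 1, 28),
    review_by=date(2025, 7, 28),
)
print(decision.to_json())
print(decision.needs_review(date(2025, 8, 1)))
```

Keeping the record immutable and serializable is one way to make each decision explicit and reviewable later. A real process would likely add versioning and approval workflows that this sketch omits.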

Industry research, including Gartner’s work on data and AI, consistently frames AI-Ready not as an output, but as an ongoing data governance process. This perspective applies whether organizations are exploring data mesh, real-time analytics, or AI for business intelligence.

Stricter Privacy Constraints Make Standards Even More Critical

In reality, many organizations cannot move raw data freely.

Network separation, export approvals, and internal security controls are no longer exceptions — they are the default, especially in regulated industries and the public sector.

As a result, AI initiatives stall not due to insufficient data, but because data cannot be shared or validated safely.

This shifts the core question.

It is no longer “How do we protect raw data?”

Protection is assumed.

The more important question becomes:

What forms of data use are possible under these constraints?

Increasingly, organizations are adopting approaches where raw data remains internal, while analysis, validation, and model testing rely on controlled derivatives such as synthetic data or verified outputs. In this model, privacy is not an obstacle to AI — it defines how AI is designed and operated.

Data Privacy Day: Rethinking Data Standards for the AI Era

Data Privacy Day is not simply a reminder to protect information.

For organizations already investing in AI agents, AI for data analytics, or broader artificial intelligence models, it is an opportunity to reassess whether they have clear, enforceable standards for using data responsibly.

Privacy is no longer optional.

At the same time, halting data use entirely is not a sustainable solution.

What organizations need is not another tool or declaration, but a shared operational framework for making data decisions — one that aligns privacy, governance, and real-world AI use.

Where SynTitan Fits

In this context, AI-Ready data is not a buzzword or a one-time preparation step.

It is an operational standard for deciding which data can be used, for what purpose, under which constraints, and with what level of accountability.

This is the problem SynTitan is designed to address.

SynTitan helps organizations make data usable for AI by clarifying conditions, risks, and usage boundaries, rather than by moving or exposing raw data.

Teams can evaluate whether data is fit for specific AI and analytics use cases, document constraints, and prepare datasets that remain explainable and reviewable under privacy and regulatory requirements.

Privacy, in this approach, is not treated as a blocker.

It is the baseline assumption that shapes how data is handled from the start.

On Data Privacy Day, the critical question is no longer whether data should be protected.

It is whether your organization has a clear, repeatable standard for using data responsibly in AI-driven work.

AI succeeds not when data is simply available, but when decisions about data are explicit, explainable, and consistently applied.

That is the foundation SynTitan is built around.
